We assume that we’re sorting a total of nn elements in the entire array.

- The divide step takes constant time, regardless of the subarray size. After all, the divide step just computes the midpoint qq of the indices pp and rr. Recall that in big-Θ notation, we indicate constant time by Theta(1)Θ(1).

- The conquer step, where we recursively sort two subarrays of approximately n/2n/2 elements each, takes some amount of time, but we’ll account for that time when we consider the subproblems.

- The combine step merges a total of nn elements, taking Theta(n)Θ(n) time.

If we think about the divide and combine steps together, the Theta(1)Θ(1) running time for the divide step is a low-order term when compared with the Theta(n)Θ(n)running time of the combine step. So let’s think of the divide and combine steps together as taking Theta(n)Θ(n) time. To make things more concrete, let’s say that the divide and combine steps together take cncn time for some constant cc.

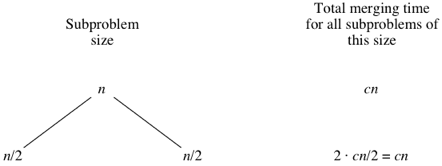

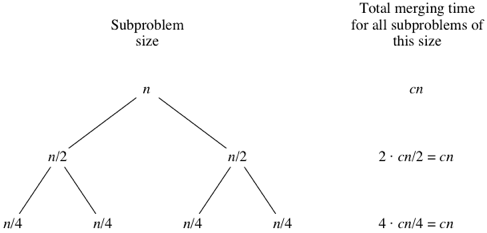

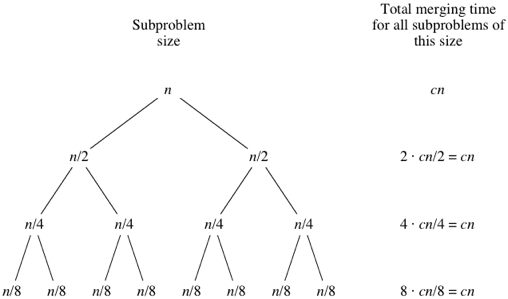

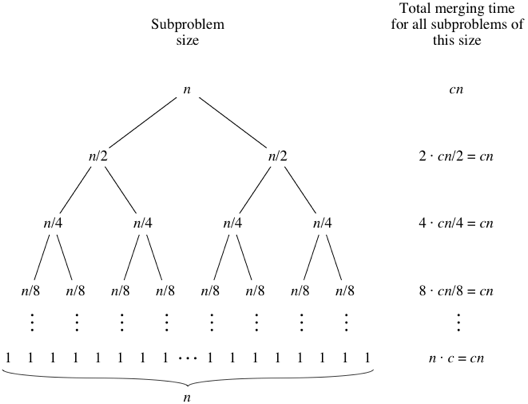

Let’s draw out the merging times in a “tree”:

Computer scientists draw trees upside-down from how actual trees grow. Atree is a graph with no cycles (paths that start and end at the same place). Convention is to call the vertices in a tree its nodes. The root node is on top; here, the root is labeled with the nn subarray size for the original nn-element array. Below the root are two child nodes, each labeled n/2n/2 to represent the subarray sizes for the two subproblems of size n/2n/2.

What do you think would happen for the subproblems of size n/8n/8? There will be eight of them, and the merging time for each will be cn/8cn/8, for a total merging time of 8 cdot cn/8 = cn8⋅cn/8=cn:

As the subproblems get smaller, the number of subproblems doubles at each “level” of the recursion, but the merging time halves. The doubling and halving cancel each other out, and so the total merging time is cncn at each level of recursion. Eventually, we get down to subproblems of size 1: the base case. We have to spend Theta(1)Θ(1) time to sort subarrays of size 1, because we have to test whether p < rp<r, and this test takes time. How many subarrays of size 1 are there? Since we started with nn elements, there must be nn of them. Since each base case takes Theta(1)Θ(1) time, let’s say that altogether, the base cases take cncntime:

When we use big-Θ notation to describe this running time, we can discard the low-order term (+1+1) and the constant coefficient (cc), giving us a running time of Theta(n lg n)Θ(nlgn), as promised.

Read more from Source: https://www.khanacademy.org/computing/computer-science/algorithms/merge-sort/a/analysis-of-merge-sort