Sorting 1 million 8-digit numbers in 1MB of RAM The problem is to sort a stream of 1 million 8-digit numbers (integers in the range $[0,: 99mathord{,}999mathord{,}999]$) using only 1 MB of memory ($2^{20}$ bytes = $2^{23}$ bits) and no external storage. The program must read values from an input stream and write the sorted result to an output stream. Obviously the entire input can’t fit into memory, but clearly the result can be represented in under 1 MB since $2^{23} geq log_2 binom{10^8}{10^6} approx 8079302$ (it’s a tight fit). So, what is the minimum amount of space needed to sort n integers with duplicates in this streaming manner, and is there an algorithm to accomplish the specified task?

Asked By : Dave Tremont

Answered By : Pedro

- $m(2^K-2) < 100’000’000$, e.g. the largest value is $<100,000,000$,

- $Km < 1’000’000$, e.g. there are at most a million values, and

- $m(K^2+1) > 8’000’000$, e.g. we use more than $8,000,000$ bits?

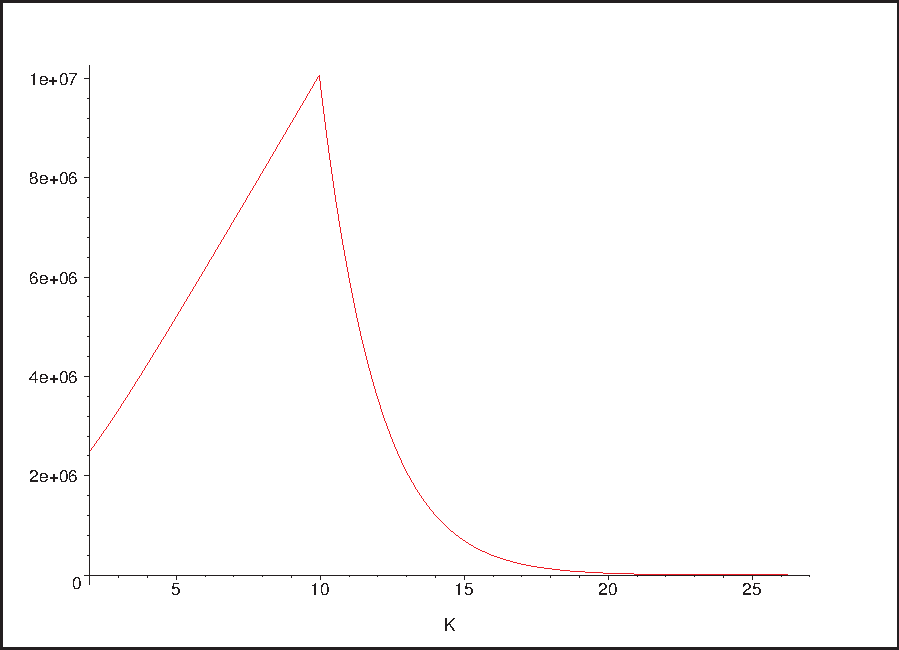

Unfortunately, this does happen. Here’s a plot of the number of bits as computed in Maple with plot( min( 100000000/(2^K-2) , 1000000/K ) * (K^2 + 1) , K=2..27 ):  So we can represent any sequence of up to one million numbers in $[0,99,999,999]$ using roughly 10 million bits. This is, unfortunately, still above the specification. But how can we sort numbers like this? Well, remember that the numbers come in one by one, so as each number comes in, we update its bucket count ($mathcal O(1)$) and compute the new optimal prefixes for each bucket ($mathcal O(loglog(i_mathsf{max}))$ if the buckets are indexed in a heap). We then run through our encoded $delta_k$, reading each entry, computing the respective $i_k$ from the cumulative sum, and comparing it to the new value. If the computed $i_k$ exceeds the new value, we encode the new value and write it back, along with the adjusted $delta_k$, using the new encoding. Otherwise, we just write back the $delta_k$ with the new encoding. But write-back where? Well, if we treat our eight million bits as one large ring buffer, we can append the new, re-coded sequence of $delta_k$ to the old sequence, re-writing the old values as we go along. We’ve got almost 1 million extra bits from the worse-case scenario, and I don’t think the re-coding can cause that amount of stretching to make this not work. All in all, this leaves us with a $mathcal O(n)$ cost for inserting the $n$th number, which gives us an average-case cost of $mathcal O(N^2)$ for a total of $N$ elements. This isn’t really good, but speed did not seem to be a criteria.

So we can represent any sequence of up to one million numbers in $[0,99,999,999]$ using roughly 10 million bits. This is, unfortunately, still above the specification. But how can we sort numbers like this? Well, remember that the numbers come in one by one, so as each number comes in, we update its bucket count ($mathcal O(1)$) and compute the new optimal prefixes for each bucket ($mathcal O(loglog(i_mathsf{max}))$ if the buckets are indexed in a heap). We then run through our encoded $delta_k$, reading each entry, computing the respective $i_k$ from the cumulative sum, and comparing it to the new value. If the computed $i_k$ exceeds the new value, we encode the new value and write it back, along with the adjusted $delta_k$, using the new encoding. Otherwise, we just write back the $delta_k$ with the new encoding. But write-back where? Well, if we treat our eight million bits as one large ring buffer, we can append the new, re-coded sequence of $delta_k$ to the old sequence, re-writing the old values as we go along. We’ve got almost 1 million extra bits from the worse-case scenario, and I don’t think the re-coding can cause that amount of stretching to make this not work. All in all, this leaves us with a $mathcal O(n)$ cost for inserting the $n$th number, which gives us an average-case cost of $mathcal O(N^2)$ for a total of $N$ elements. This isn’t really good, but speed did not seem to be a criteria.

Best Answer from StackOverflow

Question Source : http://cs.stackexchange.com/questions/6236